Load Balancer: 7 Powerful Benefits You Can’t Ignore

Ever wondered how websites handle millions of users without crashing? The secret lies in a powerful tool called a Load Balancer. It’s the unsung hero behind smooth online experiences, quietly distributing traffic to keep everything running fast and reliable.

What Is a Load Balancer and How Does It Work?

A Load Balancer acts as a traffic cop for your network, intelligently directing incoming client requests across multiple servers. This ensures no single server gets overwhelmed, improving both performance and reliability. Whether you’re running a small web app or a global e-commerce platform, a Load Balancer is essential for maintaining uptime and speed.

The Core Function of a Load Balancer

At its heart, a Load Balancer’s job is to distribute workloads—like HTTP requests, database queries, or API calls—across a pool of backend servers. This distribution prevents bottlenecks and ensures optimal resource utilization. Think of it as a smart router that doesn’t just forward data, but decides the best path based on real-time conditions.

- Distributes incoming network traffic across server farms

- Monitors server health and removes failed instances

- Enables seamless scaling during traffic spikes

Types of Load Balancers: From Hardware to Software

Load Balancers come in different forms, each suited to specific environments. Traditional hardware-based load balancers, like those from F5 or Citrix, offer high performance but come with high costs and limited flexibility. On the other hand, software-based solutions such as NGINX and HAProxy, or cloud-native options like AWS Elastic Load Balancing (ELB), provide scalability and cost-efficiency.

- Hardware Load Balancers: Physical devices with dedicated processors (e.g., F5 BIG-IP)

- Software Load Balancers: Run on standard servers (e.g., NGINX, HAProxy)

- Cloud Load Balancers: Fully managed services (e.g., Google Cloud Load Balancing, Azure Load Balancer)

“A Load Balancer isn’t just about spreading traffic—it’s about making your infrastructure resilient.” — Cloud Architecture Best Practices, AWS Documentation

Why Every Modern Application Needs a Load Balancer

In today’s digital-first world, downtime equals lost revenue and damaged reputation. A Load Balancer is no longer a luxury—it’s a necessity. From startups to enterprises, any application expecting consistent traffic should implement load balancing to ensure high availability and fault tolerance.

Ensuring High Availability and Fault Tolerance

One of the most critical roles of a Load Balancer is maintaining application uptime. By continuously monitoring server health, it can automatically reroute traffic away from failed or unresponsive servers. If one server crashes, new requests are shifted to healthy nodes as soon as the failure is detected, so most users never notice. Only requests already in flight on the failed server may need a retry.

- Health checks detect server failures within a few check intervals

- Automatic failover prevents service disruption

- Supports zero-downtime deployments
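To make the failover idea concrete, here is a minimal sketch of how a balancer might track health. The `Backend` and `HealthAwarePool` names and the `unhealthy_threshold` parameter are made up for illustration and do not come from any particular product; real load balancers run probes on a timer and usually add a separate threshold for marking a server healthy again.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True
    consecutive_failures: int = 0


class HealthAwarePool:
    """Tracks backend health and exposes only healthy backends for routing."""

    def __init__(self, backends, unhealthy_threshold=3):
        self.backends = list(backends)
        self.unhealthy_threshold = unhealthy_threshold

    def record_check(self, backend, passed):
        """Update one backend's state from the result of a single health probe."""
        if passed:
            backend.consecutive_failures = 0
            backend.healthy = True
        else:
            backend.consecutive_failures += 1
            if backend.consecutive_failures >= self.unhealthy_threshold:
                backend.healthy = False

    def healthy_backends(self):
        return [b for b in self.backends if b.healthy]


pool = HealthAwarePool([Backend("app-1"), Backend("app-2")], unhealthy_threshold=2)
app1 = pool.backends[0]
pool.record_check(app1, passed=False)  # first failure: still in rotation
pool.record_check(app1, passed=False)  # second failure: removed from rotation
print([b.name for b in pool.healthy_backends()])  # ['app-2']
```

Requiring several consecutive failures before removing a server is a common way to avoid flapping on a single slow response.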

Scaling Applications Seamlessly

As your user base grows, so should your infrastructure. A Load Balancer enables horizontal scaling—adding more servers instead of upgrading existing ones. This is especially crucial in cloud environments where auto-scaling groups dynamically add or remove instances based on demand.

- Integrates with auto-scaling systems (e.g., AWS Auto Scaling)

- Handles sudden traffic surges during promotions or viral events

- Reduces the need for over-provisioning resources
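As a rough illustration of how an auto-scaler might decide how many servers to keep behind the Load Balancer, the sketch below uses a simple proportional rule. The function name, the load units, and the `minimum`/`maximum` bounds are assumptions for this example, not the formula of any specific cloud provider.

```python
import math


def desired_instances(current, observed_load, target_load, minimum=1, maximum=20):
    """Scale the instance count in proportion to observed vs. target load."""
    raw = math.ceil(current * observed_load / target_load)
    # Clamp to the configured bounds so a bad metric cannot scale without limit.
    return max(minimum, min(maximum, raw))


print(desired_instances(4, observed_load=90, target_load=60))  # 6
print(desired_instances(4, observed_load=30, target_load=60))  # 2
```

With four servers running at 90% CPU against a 60% target, the rule asks for six; when load drops to 30%, it shrinks the pool to two.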

Types of Load Balancing Algorithms Explained

Not all Load Balancers distribute traffic the same way. The method they use—called a load balancing algorithm—can significantly impact performance. Choosing the right algorithm depends on your application’s architecture, traffic patterns, and performance goals.

Round Robin and Weighted Round Robin

The Round Robin algorithm is the simplest and most widely used. It distributes requests sequentially across servers, like dealing cards in a circle. While fair, it doesn’t account for server capacity or current load.

Weighted Round Robin improves on this by assigning weights to servers based on their processing power or capacity. For example, a server with a weight of 2 receives twice as many requests as a server with a weight of 1.

- Plain Round Robin is ideal for homogeneous server environments

- Easy to implement and predict

- May not adapt well to dynamic loads
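Both variants fit in a few lines of Python. This is a simplified sketch with made-up server names. The weighted version uses the naive approach of repeating each server according to its weight, whereas production balancers typically interleave the picks more smoothly.

```python
import itertools


def round_robin(servers):
    """Yield servers in a fixed repeating order."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield servers in proportion to their integer weights.

    A server with weight 2 simply appears twice per cycle.
    """
    expanded = [name for name, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


rr = round_robin(["a", "b", "c"])
print([next(rr) for _ in range(5)])   # ['a', 'b', 'c', 'a', 'b']

wrr = weighted_round_robin({"big": 2, "small": 1})
print([next(wrr) for _ in range(6)])  # ['big', 'big', 'small', 'big', 'big', 'small']
```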

Least Connections and Weighted Least Connections

The Least Connections algorithm routes each new request to the server with the fewest active connections. It’s well suited to long-lived sessions or applications with variable processing times, because a server that is still busy with slow requests receives fewer new ones.

Weighted Least Connections adds server capacity into the equation, giving more capable servers a higher share of the load.

- Best for applications with persistent connections (e.g., video streaming)

- Adapts dynamically to server load

- Reduces response time during peak usage
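Here is a minimal sketch of the idea, assuming the balancer can count open connections per server. The class and method names are illustrative. Setting every weight to 1 gives plain Least Connections, while other weights give the weighted variant by comparing connections per unit of capacity.

```python
class LeastConnectionsBalancer:
    def __init__(self, weights):
        # weights: server name -> relative capacity (1 = baseline)
        self.weights = dict(weights)
        self.active = {name: 0 for name in self.weights}

    def acquire(self):
        """Pick the server with the lowest active-connections-to-weight ratio."""
        name = min(self.active, key=lambda n: self.active[n] / self.weights[n])
        self.active[name] += 1
        return name

    def release(self, name):
        """Call when a connection closes so the count stays accurate."""
        self.active[name] -= 1


lb = LeastConnectionsBalancer({"a": 1, "b": 1})
first = lb.acquire()   # 'a' (ties go to the first server listed)
second = lb.acquire()  # 'b'
lb.release(first)      # the connection on 'a' finishes early
third = lb.acquire()   # 'a' again, since it now has fewer active connections
print(first, second, third)  # a b a
```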

IP Hash and URL Hash Methods

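IP Hash uses the client’s IP address to determine which server receives the request. Because the same address always hashes to the same server, this provides session persistence as long as the server pool and the client’s IP address stay the same, which is useful when user data is stored locally on a server.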

Similarly, URL Hash directs requests based on the requested URL, making it ideal for content delivery networks (CDNs) or caching layers.

- Maintains session stickiness without cookies

- Useful for stateful applications

- Can lead to uneven distribution if many clients share a few IP addresses (for example, behind a NAT or corporate proxy)
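The hashing step can be sketched as follows. The `pick_by_hash` helper and the server names are hypothetical, and the same function works for URL Hash if you pass the request path instead of the IP address. Note that this simple modulo scheme remaps most keys whenever the pool size changes. Consistent hashing is the usual way to reduce that churn.

```python
import hashlib


def pick_by_hash(key, servers):
    """Map a key (client IP or URL path) to a server deterministically."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
# The same client IP always lands on the same server while the pool is unchanged.
print(pick_by_hash("203.0.113.7", servers) == pick_by_hash("203.0.113.7", servers))  # True
```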

Load Balancer in Cloud vs On-Premise Environments

The rise of cloud computing has transformed how Load Balancers are deployed and managed. While on-premise solutions offer control, cloud-based Load Balancers provide unmatched scalability and ease of management.

Cloud-Based Load Balancers: Flexibility and Scalability

Cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer fully managed Load Balancer services. These are highly scalable, automatically adjusting to traffic demands without manual intervention.

For example, AWS Elastic Load Balancing supports Application, Network, and Gateway Load Balancers, each tailored for different use cases.

- No upfront hardware costs

- Auto-scaling integration

- Global load distribution with multi-region support

On-Premise Load Balancers: Control and Security

Some organizations prefer on-premise Load Balancers for greater control over their infrastructure and enhanced security. These are often used in regulated industries like finance or healthcare, where data sovereignty is critical.

However, they require significant investment in hardware, maintenance, and skilled personnel.

- Full control over configuration and updates

- Better compliance with internal security policies

- Higher operational complexity and cost

Advanced Load Balancer Features You Should Know

Modern Load Balancers go beyond simple traffic distribution. They offer advanced features that enhance security, performance, and observability, making them a central component of any robust IT architecture.

SSL/TLS Termination and Offloading

Encrypting traffic with SSL/TLS is essential for security, but it’s computationally expensive. A Load Balancer can handle SSL termination—decrypting incoming traffic before sending it to backend servers—freeing them to focus on application logic.

- Reduces CPU load on application servers

- Centralizes certificate management

- Improves overall system performance

Content-Based Routing and Path Rules

Advanced Load Balancers can inspect HTTP headers and URLs to route requests based on content. For example, all /api/ requests can go to one server group, while /images/ goes to a CDN or image-processing cluster.

- Enables microservices architecture

- Supports A/B testing and canary deployments

- Improves user experience through intelligent routing
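A path rule table like the one described above might look like this in miniature. The pool names and the `route` function are assumptions for illustration. Real Load Balancers express the same idea in their own configuration syntax and can also match on headers or hostnames.

```python
ROUTES = [
    ("/api/", "api-pool"),
    ("/images/", "image-pool"),
]
DEFAULT_POOL = "web-pool"


def route(path):
    """Return the backend pool for a request path using longest-prefix match."""
    matches = [(prefix, pool) for prefix, pool in ROUTES if path.startswith(prefix)]
    if not matches:
        return DEFAULT_POOL
    return max(matches, key=lambda m: len(m[0]))[1]


print(route("/api/orders/42"))    # api-pool
print(route("/images/logo.png"))  # image-pool
print(route("/checkout"))         # web-pool
```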

Global Server Load Balancing (GSLB)

GSLB extends load balancing across multiple data centers or cloud regions. It directs users to the nearest or least congested location, reducing latency and improving disaster recovery.

- Improves performance for global audiences

- Provides geographic redundancy

- Supports business continuity during outages
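At its simplest, the GSLB decision is "pick the closest region that is still up." The sketch below assumes you already have a latency measurement per region and a set of regions that passed their health checks. The region names and numbers are invented, and real GSLB products usually make this decision at the DNS or anycast layer.

```python
def pick_region(latency_ms, healthy):
    """Return the healthy region with the lowest measured latency, or None."""
    candidates = {r: ms for r, ms in latency_ms.items() if r in healthy}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


latency = {"us-east": 80, "eu-west": 20, "ap-south": 150}
print(pick_region(latency, {"us-east", "eu-west", "ap-south"}))  # eu-west
print(pick_region(latency, {"us-east", "ap-south"}))             # us-east (eu-west is down)
```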

Common Load Balancer Challenges and How to Solve Them

While Load Balancers offer immense benefits, they also come with challenges. Misconfigurations, single points of failure, or improper algorithm selection can undermine their effectiveness.

Session Persistence and Sticky Sessions

In stateful applications, users must stay connected to the same server throughout their session. This is known as session persistence or sticky sessions. While useful, it can lead to uneven load distribution.

Solutions include using distributed session stores (like Redis) or enabling cookie-based persistence in the Load Balancer.

- Use Redis or Memcached for shared session storage

- Enable cookie-based stickiness in the Load Balancer

- Avoid sticky sessions in stateless microservices
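Cookie-based stickiness can be sketched like this. The `StickyBalancer` class and the `lb_backend` cookie name are hypothetical. Real Load Balancers typically encode or encrypt the server identifier rather than exposing the raw name, and they also expire the cookie.

```python
import itertools


class StickyBalancer:
    COOKIE = "lb_backend"  # hypothetical cookie name

    def __init__(self, servers):
        self.servers = list(servers)
        self._rotation = itertools.cycle(self.servers)

    def route(self, cookies):
        """Return (server, cookies_to_set) for a request's cookie dict."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.servers:
            return pinned, {}  # returning client: honor the existing pin
        server = next(self._rotation)  # new client or stale pin: assign round robin
        return server, {self.COOKIE: server}


lb = StickyBalancer(["app-1", "app-2"])
server, set_cookies = lb.route({})  # new client: assigned app-1, cookie issued
again, _ = lb.route(set_cookies)    # same client returns: still app-1
print(server, again)  # app-1 app-1
```

If the pinned server has been removed from the pool, the request falls back to normal rotation and receives a fresh cookie.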

Single Point of Failure Risks

If your Load Balancer fails, your entire application can go down. To prevent this, deploy redundant Load Balancers in an active-passive or active-active configuration.

- Use clustering or failover mechanisms

- Leverage cloud provider redundancy (e.g., AWS Multi-AZ)

- Monitor Load Balancer health with external tools

Latency and Performance Bottlenecks

While Load Balancers improve performance, poorly configured ones can introduce latency. This can happen when overly frequent health checks put extra load on the backends, or when SSL offloading isn’t sized for the volume of TLS handshakes.

- Optimize health check intervals

- Use HTTP/2 and keep-alive connections

- Monitor end-to-end latency with APM tools
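Tuning the health check interval is a trade-off between how quickly failures are detected and how much probe traffic the backends receive. The back-of-the-envelope model below makes that visible. It assumes evenly spaced probes, a timeout shorter than the interval, and a server that is removed after a fixed number of consecutive failures. The function names and numbers are illustrative only.

```python
def worst_case_detection_s(interval_s, timeout_s, unhealthy_threshold):
    """Upper bound on the time from a crash to removal from rotation."""
    # The crash may happen just after a successful probe, so the balancer
    # waits for `unhealthy_threshold` more probes, the last of which may
    # take up to `timeout_s` to fail.
    return unhealthy_threshold * interval_s + timeout_s


def probes_per_second(backend_count, interval_s):
    """Probe traffic generated by one balancer node across all backends."""
    return backend_count / interval_s


print(worst_case_detection_s(interval_s=5, timeout_s=2, unhealthy_threshold=3))  # 17
print(probes_per_second(backend_count=50, interval_s=5))                         # 10.0
```

Halving the interval roughly halves detection time but doubles the probe traffic, so the right value depends on how expensive your health endpoint is.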

Best Practices for Implementing a Load Balancer

Deploying a Load Balancer isn’t just about installation—it’s about strategic planning. Following best practices ensures you get the most out of your investment while avoiding common pitfalls.

Right-Sizing Your Load Balancer

Choose a Load Balancer that matches your traffic volume and growth projections. Over-provisioning wastes money; under-provisioning risks performance issues. Cloud-based solutions make this easier with pay-as-you-go models.

- Start small and scale as needed

- Use monitoring to predict future needs

- Consider burst capacity for seasonal traffic

Monitoring and Logging for Optimal Performance

Continuous monitoring is crucial. Track metrics like request rate, error rate, latency, and server health. Tools like Prometheus, Grafana, or cloud-native CloudWatch can help visualize performance.

- Set up alerts for high error rates or latency spikes

- Log all Load Balancer activity for auditing

- Use dashboards to monitor real-time traffic flow
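Two of the metrics mentioned above, error rate and tail latency, are easy to compute from access-log samples. The sketch below uses made-up sample data and a nearest-rank percentile. Monitoring tools may use a slightly different percentile definition, so treat the exact numbers as illustrative.

```python
import math


def error_rate(status_codes):
    """Fraction of responses with a 5xx status."""
    if not status_codes:
        return 0.0
    return sum(1 for s in status_codes if s >= 500) / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


statuses = [200, 200, 502, 200, 500, 200, 200, 200, 200, 200]
latencies_ms = [12, 15, 11, 240, 13, 14, 16, 12, 18, 13]
print(error_rate(statuses))          # 0.2
print(percentile(latencies_ms, 95))  # 240
```

The single 240 ms request barely moves the average but dominates the 95th percentile, which is why alerts are usually set on percentiles rather than means.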

Security Considerations with Load Balancers

Load Balancers are often the first line of defense. They should be configured with security in mind—enabling DDoS protection, WAF integration, and strict access controls.

- Integrate with Web Application Firewalls (WAF)

- Enable DDoS protection (e.g., AWS Shield)

- Restrict access via IP whitelisting or VPCs

Future Trends in Load Balancer Technology

As applications evolve, so do Load Balancers. Emerging trends like AI-driven traffic management, service mesh integration, and edge computing are reshaping how load balancing works.

AI and Machine Learning in Traffic Management

Future Load Balancers may use AI to predict traffic patterns and proactively scale resources. Machine learning models can analyze historical data to optimize routing decisions in real time.

- Predictive scaling based on user behavior

- Anomaly detection for security threats

- Self-optimizing routing algorithms

Service Mesh and Load Balancer Integration

In microservices architectures, service meshes like Istio or Linkerd handle internal service-to-service communication. They include built-in load balancing, reducing the need for traditional Load Balancers within the cluster.

However, external Load Balancers still play a role in managing ingress traffic.

- Istio uses Envoy as a sidecar proxy with load balancing

- Reduces complexity in internal routing

- Complements external Load Balancers

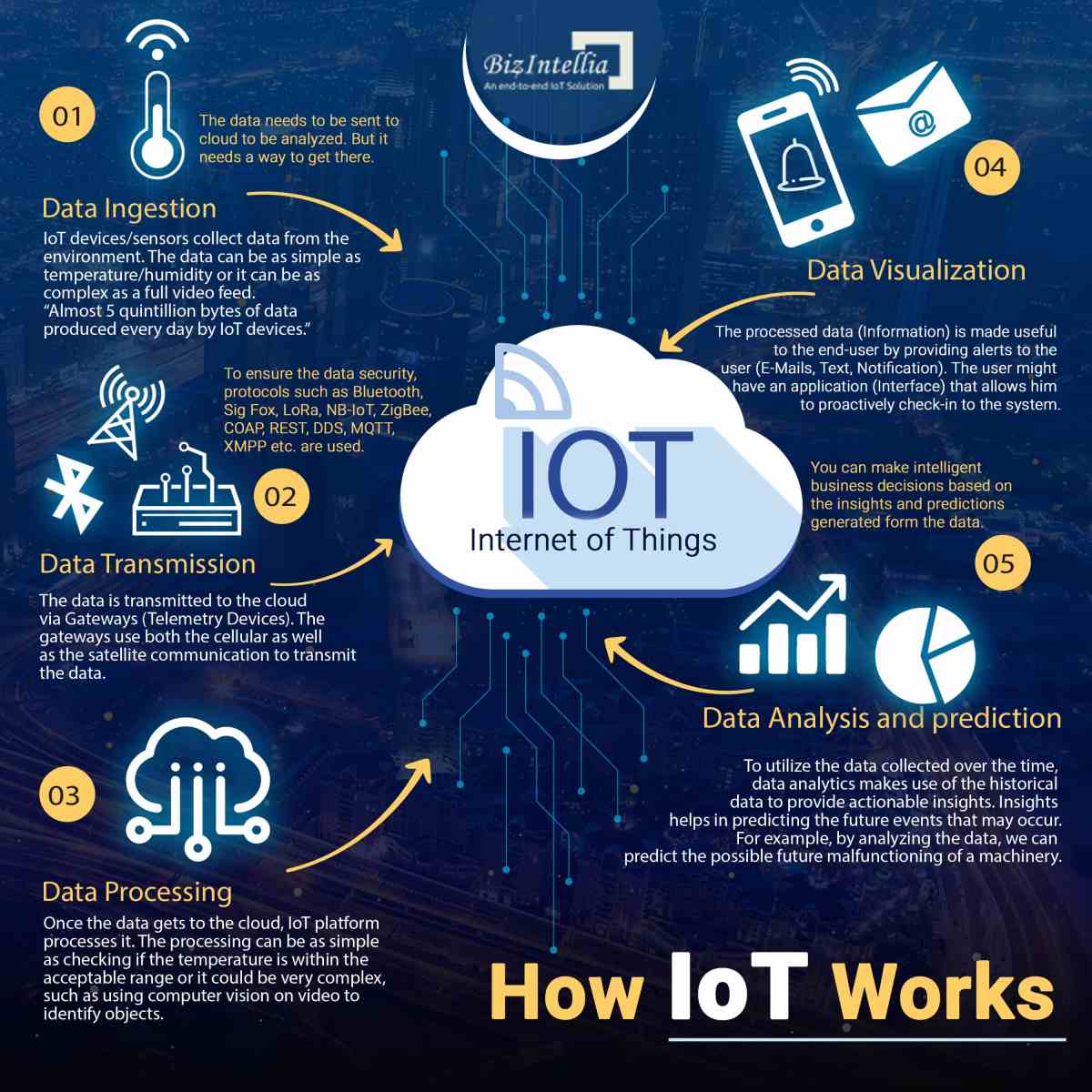

Edge Load Balancing for Low-Latency Applications

With the rise of IoT and real-time apps, edge computing is gaining traction. Load Balancers deployed at the edge can route requests to the nearest processing node, minimizing latency.

- Supports real-time gaming, AR/VR, and autonomous vehicles

- Reduces round-trip time to central data centers

- Enhances user experience in latency-sensitive apps

What is a Load Balancer?

A Load Balancer is a device or software that distributes network traffic across multiple servers to ensure no single server becomes overwhelmed, improving application availability, performance, and scalability.

What are the main types of Load Balancers?

The main types are hardware (physical devices), software (applications like NGINX), and cloud-based (managed services like AWS ELB). Each has its own use cases based on cost, scalability, and control.

Which load balancing algorithm is best?

There’s no one-size-fits-all answer. Round Robin works well for uniform traffic, Least Connections suits variable workloads, and IP Hash keeps each client on the same server for session persistence. The best choice depends on your application’s needs.

Can a Load Balancer improve security?

Yes. Modern Load Balancers offer SSL termination, DDoS protection, and integration with Web Application Firewalls (WAF), making them a critical part of a secure architecture.

Do I need a Load Balancer for a small website?

For low-traffic sites, it may not be necessary. But if you expect growth, plan for high availability, or use multiple servers, a Load Balancer becomes essential for reliability and performance.

Load Balancer technology is more than just traffic distribution—it’s a cornerstone of modern, resilient, and scalable applications. From ensuring high availability to enabling global reach and enhancing security, its role continues to evolve with the digital landscape. Whether you’re running a simple web app or a complex microservices ecosystem, implementing a smart Load Balancer strategy is a powerful step toward reliability and performance. As cloud computing, AI, and edge networks advance, the Load Balancer will remain a vital tool in every architect’s toolkit.
