Azure Event Hubs: 7 Powerful Insights for Real-Time Data Mastery

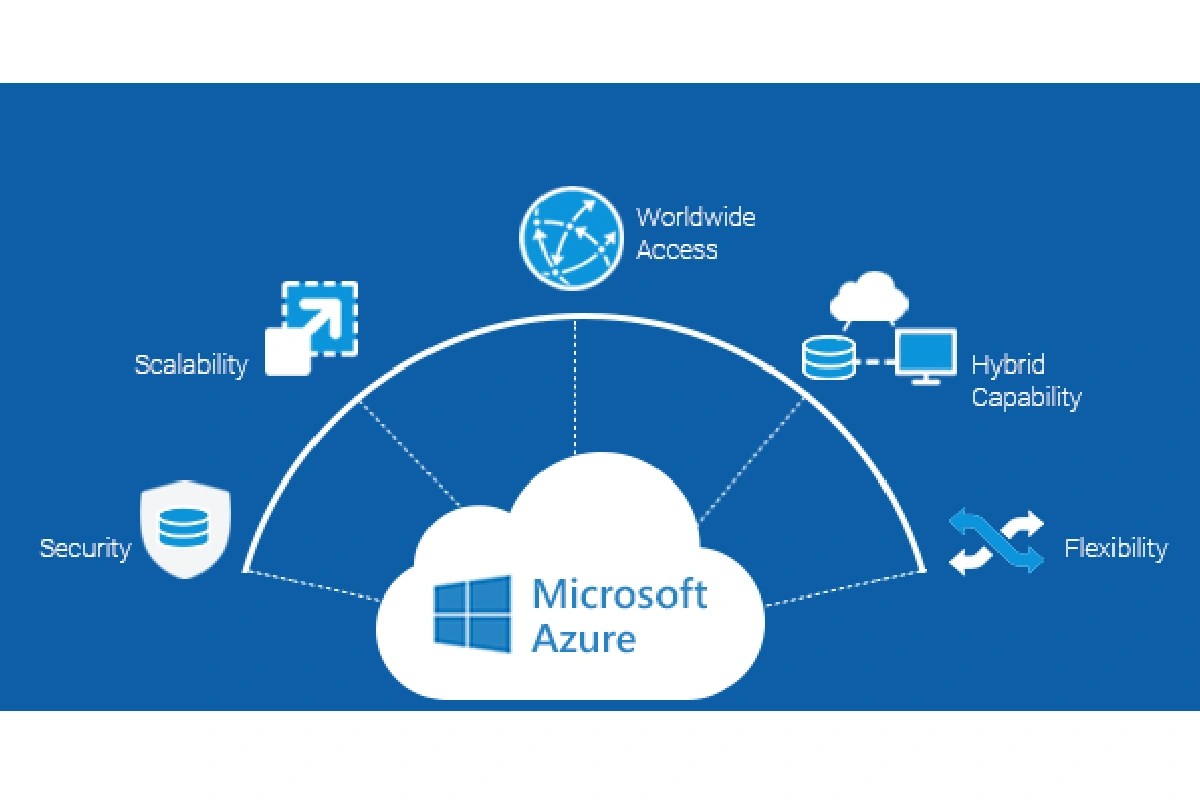

Imagine handling millions of events per second from IoT devices, apps, and services—all in real time. That’s where Azure Event Hubs shines, offering a robust, scalable, and secure event ingestion service in the cloud.

What Is Azure Event Hubs and Why It Matters

Azure Event Hubs is a fully managed, real-time data streaming platform and event ingestion service built by Microsoft for the cloud. Designed to handle massive volumes of telemetry and event data from diverse sources—like websites, mobile apps, IoT sensors, and backend systems—it serves as the front door for event-driven architectures in Azure.

At its core, Event Hubs acts as a distributed messaging system that decouples data producers from consumers. This allows systems to scale independently, ensuring reliability and performance even under extreme load. Whether you’re building a real-time analytics pipeline, monitoring system, or event-driven microservices, Azure Event Hubs provides the backbone for streaming data at scale.

Core Components of Azure Event Hubs

Understanding the architecture of Azure Event Hubs is essential to leveraging its full potential. The service is built around several key components that work together to enable high-throughput, low-latency event processing.

- Event Producers: Applications or devices that send data (events) to Event Hubs. These can be IoT sensors, web servers, mobile apps, or backend services.

- Event Consumers: Applications that read and process events from Event Hubs. Examples include Azure Stream Analytics, Azure Functions, or custom consumers using the Event Processor Host library.

- Event Hubs Namespace: A scoping container that holds one or more Event Hubs instances. It provides a unique identifier and access control boundary.

- Partitions: Each Event Hub is divided into partitions, which are the fundamental units of scalability and parallelism. Events are distributed across partitions based on a partition key, enabling concurrent processing.

- Capture: A feature that automatically archives event data to Azure Blob Storage or Azure Data Lake Storage for long-term retention and batch processing.

“Azure Event Hubs enables organizations to build responsive, data-driven applications by providing a reliable, high-scale event ingestion pipeline.” (Microsoft Azure Documentation)

How Azure Event Hubs Compares to Other Messaging Services

While Azure offers several messaging services, such as Azure Service Bus, Azure Queue Storage, and Event Grid, it’s important to understand where Event Hubs fits in the ecosystem.

Unlike Service Bus, which is designed for enterprise messaging with advanced features like sessions, transactions, and message deferral, Azure Event Hubs is optimized for high-throughput event streaming. It’s built for scenarios where volume and velocity matter more than message-level guarantees.

Compared to Azure Queue Storage, which is a simple, cost-effective solution for basic queuing, Event Hubs supports complex event routing, partitioning, and real-time processing. And while Azure Event Grid is ideal for event notification and serverless event routing, it’s not designed for high-volume telemetry ingestion—this is where Event Hubs excels.

For a deeper comparison, Microsoft provides a detailed guide on messaging services in Azure, helping architects choose the right tool for their use case.

Key Features That Make Azure Event Hubs Stand Out

Azure Event Hubs isn’t just another messaging system—it’s engineered for performance, scalability, and integration. Its feature set is tailored for modern cloud-native applications that demand real-time insights from massive data streams.

High Throughput and Low Latency

One of the standout features of Azure Event Hubs is its ability to handle millions of events per second with minimal latency. This is achieved through a distributed architecture that scales horizontally across multiple nodes.

Throughput is measured in throughput units (TUs), with each TU supporting:

- 1 MB/sec ingress (data coming in)

- 2 MB/sec egress (data going out)

- 1,000 event sends per second

- Additional operations like management calls and consumer reads

You can start with a few TUs and scale up to hundreds, or opt for the dedicated tier for even greater capacity and isolation. This flexibility makes it suitable for both small-scale applications and enterprise-grade systems.
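To make the per-TU limits concrete, here is a minimal sizing sketch. It assumes the three limits listed above are the only constraints and that the most demanding one decides the TU count; the function name and constants are illustrative, not part of any Azure SDK.

```python
import math

# Per-TU limits as listed in this article.
INGRESS_MB_PER_TU = 1.0
EGRESS_MB_PER_TU = 2.0
EVENTS_PER_SEC_PER_TU = 1000


def required_throughput_units(ingress_mb_s, egress_mb_s, events_per_s):
    """Return the smallest whole number of TUs that covers all three limits."""
    needed = max(
        ingress_mb_s / INGRESS_MB_PER_TU,
        egress_mb_s / EGRESS_MB_PER_TU,
        events_per_s / EVENTS_PER_SEC_PER_TU,
    )
    # A namespace always has at least one TU.
    return max(1, math.ceil(needed))


# 3.5 MB/sec in, 4 MB/sec out, 2,500 events/sec: ingress is the bottleneck.
print(required_throughput_units(3.5, 4.0, 2500))  # 4
```

In this example the ingress rate drives the answer, which is a common pattern: many small events hit the event-count limit first, while fewer large events hit the MB/sec limit first.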

Event Retention and Replay Capability

Unlike traditional messaging systems that delete messages after consumption, Azure Event Hubs retains events for a configurable period—up to 7 days in the standard tier and up to 90 days in the dedicated tier.

This retention window allows consumers to replay events, which is invaluable for debugging, reprocessing data, or onboarding new analytics systems. For example, if a downstream system fails, you can restart it and replay events from a specific point in time without losing data.

This feature transforms Event Hubs from a simple message broker into a time-travelable event log, enabling powerful use cases in data lineage and auditability.
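The replay idea is easier to see with a toy model. The sketch below is an in-memory stand-in for a single partition, not the Event Hubs API: the class and method names are hypothetical, and retention expiry is left out. What it shows is that reading does not remove events, so a consumer can start again from an offset or from a point in time.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StoredEvent:
    offset: int          # position in the partition log
    enqueued_at: float   # seconds since epoch when the event was accepted
    body: str


class PartitionLog:
    """Toy append-only log: reading never deletes anything."""

    def __init__(self) -> None:
        self._events: List[StoredEvent] = []

    def append(self, body: str, enqueued_at: float) -> StoredEvent:
        event = StoredEvent(len(self._events), enqueued_at, body)
        self._events.append(event)
        return event

    def read_from_offset(self, offset: int) -> List[StoredEvent]:
        return self._events[offset:]

    def read_from_time(self, timestamp: float) -> List[StoredEvent]:
        return [e for e in self._events if e.enqueued_at >= timestamp]


log = PartitionLog()
for i, body in enumerate(["a", "b", "c", "d"]):
    log.append(body, enqueued_at=100.0 + i)

first_pass = [e.body for e in log.read_from_offset(0)]
replayed = [e.body for e in log.read_from_time(102.0)]
print(first_pass)  # ['a', 'b', 'c', 'd']
print(replayed)    # ['c', 'd']
```

The second read returns events that were already consumed once, which is exactly what a restarted downstream system relies on.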

Kafka Compatibility and Hybrid Integration

Azure Event Hubs exposes an endpoint compatible with Apache Kafka 1.0 and later, so Kafka-native applications can usually connect with configuration changes alone (endpoint and credentials) rather than code changes. This means you can use existing Kafka producers and consumers to send and receive events from Event Hubs.

This compatibility is a game-changer for organizations migrating from on-premises Kafka clusters to the cloud. You can leverage Azure’s scalability and managed infrastructure while reusing your existing Kafka tooling, such as Kafka Connect, MirrorMaker, or consumer libraries.

To get started, Microsoft provides a comprehensive guide on using Kafka with Event Hubs, including configuration details and best practices.
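When an existing Kafka client is pointed at Event Hubs, the part that typically changes is the connection configuration. The sketch below builds librdkafka-style settings (the key names used by clients such as confluent-kafka); the helper name, the namespace, and the connection string are placeholders chosen for illustration.

```python
def kafka_config_for_event_hubs(namespace: str, connection_string: str) -> dict:
    """Build librdkafka-style settings that point a Kafka client at Event Hubs."""
    return {
        # Event Hubs serves its Kafka endpoint on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The literal user name "$ConnectionString" signals that the password
        # field carries a namespace connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Placeholder values; a real connection string comes from the namespace's
# shared access policies and should not be hardcoded.
config = kafka_config_for_event_hubs("example-ns", "<connection-string>")
print(config["bootstrap.servers"])  # example-ns.servicebus.windows.net:9093
```

The rest of the client code (topic names map to event hub names) can usually stay as it is, though it is worth checking the feature list in the official guide for anything your application depends on.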

Use Cases: Where Azure Event Hubs Shines

The true power of Azure Event Hubs becomes evident when applied to real-world scenarios. Its ability to ingest, buffer, and distribute massive event streams makes it ideal for a wide range of applications across industries.

IoT and Telemetry Data Ingestion

In the Internet of Things (IoT) landscape, devices generate vast amounts of telemetry data—temperature readings, GPS coordinates, sensor status, and more. Azure Event Hubs serves as the central ingestion point for this data, handling millions of device messages per second.

For example, a smart city project might use Event Hubs to collect data from traffic sensors, air quality monitors, and surveillance cameras. This data can then be processed in real time using Azure Stream Analytics or forwarded to Azure Databricks for machine learning models.

Integration with Azure IoT Hub is seamless—IoT Hub can route device-to-cloud messages directly to Event Hubs, enabling hybrid architectures where device management and event streaming are decoupled.

Real-Time Analytics and Monitoring

Businesses today demand real-time visibility into their operations. Azure Event Hubs enables real-time analytics by feeding event streams into processing engines like Azure Stream Analytics, Azure Synapse, or third-party tools like Apache Spark on HDInsight.

For instance, an e-commerce platform can use Event Hubs to capture user interactions—clicks, purchases, cart additions—and analyze them in real time to detect fraud, personalize recommendations, or monitor system health.

Similarly, IT operations teams can stream application logs and metrics into Event Hubs, then use Azure Monitor or Splunk to visualize dashboards and trigger alerts based on anomalies.

Event-Driven Microservices Architecture

Modern applications are increasingly built using microservices, where components communicate asynchronously via events. Azure Event Hubs acts as the central event bus, enabling loose coupling and scalability.

In this architecture, when a user places an order, the order service publishes an “OrderCreated” event to Event Hubs. Other services—like inventory, billing, and notification—subscribe to this event and react accordingly, without direct dependencies.

This pattern improves resilience and scalability, as each service can process events at its own pace and scale independently. It also simplifies versioning and deployment, as changes to one service don’t require changes to others.

How to Get Started with Azure Event Hubs

Setting up Azure Event Hubs is straightforward, whether you’re using the Azure portal, CLI, PowerShell, or infrastructure-as-code tools like ARM templates or Terraform.

Creating an Event Hubs Namespace and Hub

The first step is to create an Event Hubs namespace, which acts as a container for your Event Hubs. You can do this via the Azure portal:

- Navigate to the Azure portal and select “Create a resource”.

- Search for “Event Hubs” and select the service.

- Choose your subscription, resource group, and region.

- Enter a unique namespace name and select the pricing tier (Basic, Standard, Premium, or Dedicated).

- After the namespace is created, you can add one or more Event Hubs within it.

Each Event Hub can be configured with a specific number of partitions and a message retention period. It’s important to plan your partition count carefully: in the Basic and Standard tiers it cannot be changed after creation, while the Premium and Dedicated tiers allow it to be increased but not decreased.

Configuring Producers and Consumers

Once your Event Hub is set up, you need to configure producers to send events and consumers to process them.

Producers use connection strings or managed identities to authenticate and send events via HTTPS or the AMQP protocol. The Azure SDKs (available for .NET, Java, Python, Node.js, etc.) simplify this process with built-in libraries.

For consumers, you can use an event processor library (the Event Processor Host, EPH, in the older SDKs, or the event processor clients in the current Azure SDKs), which handles partition management, checkpointing, and load balancing automatically. Alternatively, you can use Azure Functions with Event Hubs triggers, which scale dynamically based on incoming events.

Microsoft provides sample code and tutorials on sending and receiving events, making it easy to get started with your preferred language.
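As a rough illustration of the producer side, the sketch below uses the `azure-eventhub` Python package to send a batch of readings keyed by device ID. The helper names, the event hub name, and the payload shape are assumptions made for this example, and the SDK import is deferred into the function so the serialization helper can be tried without the package installed.

```python
import json
from typing import Iterable, List


def encode_readings(readings: Iterable[dict]) -> List[str]:
    """Serialize each reading to a compact JSON string, one per event."""
    return [json.dumps(r, separators=(",", ":"), sort_keys=True) for r in readings]


def send_readings(connection_string: str, eventhub_name: str,
                  device_id: str, readings: Iterable[dict]) -> None:
    """Send readings as one batch, keyed by device so they share a partition."""
    # Imported lazily so this module loads even where the SDK is not
    # installed (pip install azure-eventhub).
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        connection_string, eventhub_name=eventhub_name
    )
    with producer:
        batch = producer.create_batch(partition_key=device_id)
        for payload in encode_readings(readings):
            # add() raises ValueError once the batch reaches its size limit;
            # a fuller version would send the batch and start a new one.
            batch.add(EventData(payload))
        producer.send_batch(batch)


print(encode_readings([{"temp": 21.5, "id": 1}]))  # ['{"id":1,"temp":21.5}']
```

A call such as `send_readings(conn_str, "telemetry", "device-42", readings)` would then publish the batch, where `conn_str` and `"telemetry"` stand in for your own connection string and event hub name. For production use, a credential-based client is generally preferable to a connection string.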

Scaling and Performance Optimization

One of the biggest advantages of Azure Event Hubs is its ability to scale seamlessly with your workload. However, achieving optimal performance requires understanding how to configure and monitor your Event Hubs instance.

Understanding Throughput Units and Auto-Inflate

Throughput Units (TUs) are the primary scaling metric for Event Hubs. Each TU provides a certain amount of ingress and egress capacity. If your application exceeds the capacity of your current TUs, you may experience throttling.

To avoid this, Azure offers the Auto-Inflate feature, which automatically scales the number of TUs up to a specified maximum based on traffic. This helps you absorb traffic spikes without being throttled, while the maximum you set puts a ceiling on cost.

However, Auto-Inflate only scales up—it doesn’t scale down. So, while it’s great for handling bursts, you should monitor usage and adjust the maximum limit to avoid over-provisioning.
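The scale-up-only behavior can be pictured with a simplified model. The sketch below is not the service’s actual algorithm: it assumes ingress is the only trigger and that the TU count jumps straight to what the current load needs, capped at the configured maximum. The function name is hypothetical.

```python
import math
from typing import List


def simulate_auto_inflate(ingress_mb_s: List[float], start_tus: int,
                          max_tus: int) -> List[int]:
    """Return the TU count in effect after each traffic sample."""
    tus = start_tus
    history = []
    for load in ingress_mb_s:
        needed = math.ceil(load)  # 1 MB/sec of ingress per TU
        if needed > tus:
            tus = min(needed, max_tus)  # grow, but never past the cap
        history.append(tus)  # no branch ever lowers the TU count
    return history


print(simulate_auto_inflate([0.5, 3.2, 1.0, 9.0, 0.2], start_tus=1, max_tus=5))
# [1, 4, 4, 5, 5]
```

Notice that the count stays at 4 and then 5 after the load drops, and that the 9 MB/sec spike is only partly covered because of the cap. Both effects are worth watching in your metrics.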

Partitioning Strategies for Optimal Performance

Partitions are the key to parallelism in Event Hubs. Each partition can be consumed independently, allowing multiple consumers to process events concurrently.

When designing your system, choose a partition key that distributes events evenly across partitions. For example, using a device ID or user ID as the partition key ensures that events from the same source go to the same partition, preserving order within that stream.

However, if your partition key has low cardinality (e.g., only a few values), you may end up with “hot partitions” that receive most of the traffic, creating bottlenecks. To avoid this, use high-cardinality keys or let Event Hubs assign events to partitions randomly.
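The effect of key cardinality can be demonstrated with a small simulation. The hash below (SHA-256 modulo the partition count) is an illustrative stand-in; Event Hubs uses its own internal hashing, so the specific partition numbers will differ, but the skew pattern is the same.

```python
import hashlib
from collections import Counter


def pick_partition(partition_key: str, partition_count: int) -> int:
    """Map a key to a partition deterministically (illustrative hash only)."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def partition_load(keys, partition_count: int) -> Counter:
    """Count how many events land on each partition."""
    return Counter(pick_partition(k, partition_count) for k in keys)


# 10,000 events from 10,000 distinct devices versus 10,000 events
# that only ever use two region codes as the key.
many_keys = partition_load((f"device-{i}" for i in range(10_000)), 8)
few_keys = partition_load(["eu", "us"] * 5_000, 8)

print("partitions used with device IDs:", len(many_keys))
print("partitions used with region codes:", len(few_keys))  # at most 2
```

With only two key values, at most two of the eight partitions receive any traffic, no matter how many events are sent. The same key always maps to the same partition, which is what preserves per-source ordering.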

Monitoring and Diagnostics with Azure Monitor

To ensure your Event Hubs instance is performing well, integrate it with Azure Monitor. You can collect metrics like incoming requests, successful requests, throttled requests, and latency.

Set up alerts for key thresholds—for example, if the throttled request rate exceeds 5%, it may indicate you need more TUs. You can also enable diagnostic logs to capture detailed information about connections, operations, and errors.
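The 5% rule of thumb above reduces to a simple ratio check. The sketch below assumes you have already pulled the throttled and incoming request counts for a time window from your metrics; the function names and the default threshold are illustrative.

```python
def throttled_ratio(throttled_requests: int, incoming_requests: int) -> float:
    """Fraction of requests that were throttled in the window."""
    if incoming_requests == 0:
        return 0.0  # no traffic means nothing was throttled
    return throttled_requests / incoming_requests


def needs_more_capacity(throttled_requests: int, incoming_requests: int,
                        threshold: float = 0.05) -> bool:
    """True when the throttled share exceeds the alert threshold."""
    return throttled_ratio(throttled_requests, incoming_requests) > threshold


print(needs_more_capacity(80, 1000))  # True (8% throttled)
print(needs_more_capacity(20, 1000))  # False (2% throttled)
```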

Using Log Analytics, you can query these logs to troubleshoot issues or analyze usage patterns. For example, you might discover that a particular producer is sending malformed events or that a consumer is falling behind.

Security and Compliance in Azure Event Hubs

In enterprise environments, security is non-negotiable. Azure Event Hubs provides a comprehensive set of features to protect your data and ensure compliance with regulatory standards.

Authentication and Authorization

Event Hubs supports multiple authentication methods:

- Shared Access Signatures (SAS): Token-based authentication using connection strings with specific permissions (Send, Listen, Manage).

- Microsoft Entra ID (formerly Azure Active Directory, Azure AD): Role-based access control (RBAC) that integrates with your organization’s identity system. This is the recommended method for production environments.

- Managed Identities: Allows Azure resources (like VMs or Functions) to access Event Hubs without storing credentials.

By using Azure AD, you can assign granular roles—such as Event Hubs Data Sender or Event Hubs Data Receiver—to users and applications, ensuring least-privilege access.
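For readers curious about what a SAS token contains, the sketch below follows the commonly documented scheme: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry timestamp. The namespace, policy name, and key are placeholders, and the `now` parameter exists only to make the example reproducible.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, key_name: str, key: str,
                       ttl_seconds: int = 3600,
                       now: Optional[float] = None) -> str:
    """Build a SharedAccessSignature token valid for ttl_seconds."""
    issued_at = time.time() if now is None else now
    expiry = str(int(issued_at + ttl_seconds))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signed string is the encoded URI, a newline, then the expiry time.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}"
            f"&sig={signature}&se={expiry}&skn={key_name}")


# Placeholder resource and key, for illustration only.
token = generate_sas_token(
    "https://example-ns.servicebus.windows.net/telemetry",
    key_name="send-only", key="not-a-real-key", ttl_seconds=60, now=1000,
)
print(token.split("&se=")[1])  # 1060&skn=send-only
```

Because the token embeds its own expiry and is tied to a named policy, a short lifetime and a Send-only or Listen-only policy limit the damage if a token leaks.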

Data Encryption and Network Security

All data in Azure Event Hubs is encrypted at rest using Microsoft-managed keys. You can also enable customer-managed keys (CMK) via Azure Key Vault for greater control.

For data in transit, Event Hubs uses TLS 1.2+ to secure communications between clients and the service.

To enhance network security, you can configure virtual network (VNet) service endpoints or private links to restrict access to Event Hubs from specific networks. This prevents public internet exposure and meets strict compliance requirements.

Compliance and Auditability

Azure Event Hubs is compliant with major standards such as GDPR, HIPAA, ISO 27001, and SOC 2. This makes it suitable for handling sensitive data in regulated industries like healthcare, finance, and government.

Additionally, integration with Azure Policy and Microsoft Defender for Cloud (formerly Azure Security Center) allows you to enforce security baselines and detect threats. You can also use Azure Blueprints to deploy compliant Event Hubs configurations across multiple environments.

Advanced Scenarios and Integrations

Beyond basic event ingestion, Azure Event Hubs supports advanced patterns that enable complex data workflows and hybrid architectures.

Event Hubs Capture: Bridging Streaming and Batch

Event Hubs Capture is a powerful feature that automatically archives event data to Azure Blob Storage or Azure Data Lake Storage. This creates a bridge between real-time streaming and batch processing.

For example, you might use Stream Analytics for real-time dashboards while simultaneously archiving data to Data Lake for historical analysis with Azure Databricks or Power BI.

Capture can be configured with a time or size interval (e.g., every 5 minutes or every 100 MB), and it supports Avro format for efficient storage and schema preservation.
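The time-or-size window can be thought of as a “whichever limit is reached first” rule. The sketch below groups a stream of events into capture windows on that basis. It is a simplification: it only checks the limits when an event arrives, whereas the real service works on a clock, and the function name and tuple layout are assumptions for this example.

```python
from typing import List, Tuple

Event = Tuple[float, int]  # (timestamp in seconds, size in bytes)


def split_into_windows(events: List[Event],
                       time_window_seconds: float = 300,
                       size_window_bytes: int = 100 * 1024 * 1024
                       ) -> List[List[Event]]:
    """Group events into capture windows; the first limit reached closes one."""
    windows: List[List[Event]] = []
    current: List[Event] = []
    start = None
    size = 0
    for ts, nbytes in events:
        if start is None:
            start = ts  # the window opens with its first event
        current.append((ts, nbytes))
        size += nbytes
        if ts - start >= time_window_seconds or size >= size_window_bytes:
            windows.append(current)
            current, start, size = [], None, 0
    if current:
        windows.append(current)  # trailing, not-yet-full window
    return windows


stream = [(0, 10), (100, 10), (300, 10), (310, 200), (320, 10)]
result = split_into_windows(stream, time_window_seconds=300, size_window_bytes=100)
print([len(w) for w in result])  # [3, 1, 1]
```

Here the first window closes on time (300 seconds elapsed) and the second closes on size (a single 200-byte event exceeds the 100-byte limit used in the example).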

Learn more about setting up Capture in the official Event Hubs Capture documentation.

Integration with Serverless Computing

Azure Functions and Logic Apps integrate seamlessly with Event Hubs, enabling event-driven serverless architectures.

With Azure Functions, you can create a function triggered by incoming events in Event Hubs. The runtime automatically scales the function based on event volume, and the Event Hubs extension handles checkpointing and partition management.

This is ideal for lightweight processing tasks—like sending notifications, updating databases, or filtering events—without managing infrastructure.

Similarly, Logic Apps can consume events from Event Hubs and orchestrate workflows across multiple services, such as sending emails, updating SharePoint, or calling external APIs.

Disaster Recovery and Geo-Replication

For mission-critical applications, Azure Event Hubs supports geo-disaster recovery and geo-replication. You can pair namespaces in different regions and fail over to the secondary if the primary region becomes unavailable. Check which of the two you are relying on: the geo-disaster recovery pairing replicates namespace metadata (such as event hubs and consumer groups) but not the events themselves, whereas geo-replication also copies event data.

This supports business continuity in the face of regional outages. Failover can be triggered manually or automated using health probes and Azure Monitor alerts.

Microsoft details this capability in the geo-replication documentation, including setup steps and recovery procedures.

Best Practices for Using Azure Event Hubs

To get the most out of Azure Event Hubs, follow these proven best practices that cover design, performance, security, and cost optimization.

Design for Scale and Resilience

Plan your Event Hubs deployment with scalability in mind. Choose the right number of partitions based on your expected throughput and consumer count. Remember that partition count is fixed after creation (except in dedicated tier), so over-provision slightly if unsure.

Use partition keys wisely to ensure even distribution and preserve ordering where needed. Avoid using the same partition key for all events, as this creates a bottleneck.

Implement retry logic in your producers and consumers to handle transient failures. Use exponential backoff to avoid overwhelming the system during outages.
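A minimal sketch of retry with exponential backoff is shown below. It assumes transient failures surface as a specific exception type (`TransientError` here is a stand-in, not an SDK class) and adds random jitter so that many clients do not retry in lockstep. The Azure SDK clients also ship their own retry settings, so a hand-rolled loop like this is mainly useful around custom code.

```python
import random
import time


class TransientError(Exception):
    """Stand-in for a retryable failure such as throttling or a dropped link."""


def retry_with_backoff(operation, max_attempts=5, base_delay=0.5,
                       max_delay=30.0, sleep=time.sleep):
    """Call operation(), retrying transient failures with growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # The delay ceiling doubles each attempt: 0.5s, 1s, 2s, ...
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))  # full jitter


# Example: an operation that fails twice and then succeeds.
attempts = {"count": 0}


def flaky_send():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("temporarily unavailable")
    return "sent"


recorded_delays = []
print(retry_with_backoff(flaky_send, sleep=recorded_delays.append))  # sent
```

Passing `sleep` as a parameter keeps the function easy to test, since the example records the delays instead of actually waiting.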

Monitor and Optimize Costs

Regularly monitor your Event Hubs metrics to identify underutilized resources. If you’re consistently using only a fraction of your TUs, consider reducing the TU count, or start with fewer TUs and let Auto-Inflate add capacity when traffic demands it.

Use the dedicated tier for large-scale, predictable workloads, as it offers better price-performance and additional features like Kafka mirroring and VNet support.

Enable Capture only when needed, as it incurs additional storage costs. Consider data retention policies to avoid storing events longer than necessary.

Secure by Default

Always use Azure AD for authentication in production environments. Avoid hardcoding connection strings in your applications—use Azure Key Vault to store secrets securely.

Enable private endpoints to restrict network access and encrypt data with customer-managed keys for sensitive workloads.

Regularly review access roles and audit logs to detect unauthorized access or misconfigurations.

What is Azure Event Hubs used for?

Azure Event Hubs is used for ingesting and processing massive streams of real-time data from sources like IoT devices, applications, and sensors. It’s ideal for scenarios such as real-time analytics, monitoring, event-driven architectures, and telemetry ingestion.

How does Azure Event Hubs differ from Service Bus?

While both are messaging services, Event Hubs is optimized for high-throughput event streaming and telemetry, whereas Service Bus is designed for enterprise messaging with features like sessions, transactions, and message deferral. Event Hubs handles millions of events per second, while Service Bus focuses on reliable, ordered message delivery for smaller volumes.

Can I use Kafka with Azure Event Hubs?

Yes, Azure Event Hubs provides an endpoint compatible with Apache Kafka 1.0 and later. You can usually connect Kafka producers and consumers with configuration changes rather than code changes, making it easier to migrate from on-premises Kafka clusters to the cloud.

How much does Azure Event Hubs cost?

Pricing depends on the tier (Basic, Standard, Premium, Dedicated) and usage (throughput units, data volume). The Standard tier is billed per TU per hour plus a charge for ingress events, with optional features like Capture billed separately. The dedicated tier is priced based on cluster capacity. Rates vary by region and change over time, so check the Azure pricing page for current figures.

Is data in Azure Event Hubs encrypted?

Yes, all data in Azure Event Hubs is encrypted at rest using Microsoft-managed keys. You can also enable customer-managed keys (CMK) via Azure Key Vault for greater control over encryption.

In summary, Azure Event Hubs is a powerful, scalable, and secure platform for real-time event ingestion and streaming. Whether you’re building IoT solutions, real-time analytics, or event-driven microservices, it provides the foundation for handling massive data volumes with low latency and high reliability. By understanding its architecture, features, and best practices, you can unlock its full potential and build responsive, data-driven applications in the cloud.
